Tools & Resources

AI typically refers to systems that perform complex tasks involving reasoning, decision-making, and creative problem-solving. Today, “AI” generally means Large Language Models (LLMs), which are systems designed for understanding and generating natural language.

At Cornell, the AI Innovation Hub has been working hard on developing and maintaining an innovative, scalable, and secure platform of cutting-edge tools and resources that are available to virtually all groups on campus. To help you identify which solutions best fit your needs, here’s an overview of the AI landscape and what’s available.

LLMs are the “brain” that powers AI applications. Model developers like OpenAI, Anthropic, Google, and Meta create and deploy these foundational models (e.g., GPT-5, Sonnet 4.5, Gemini 2.5 Pro, Llama 4). In many cases, these same developers also build user-friendly chat applications, such as ChatGPT, Claude, Copilot Chat, and Gemini Studio, that serve as graphical interfaces to their models. These applications also extend the models’ capabilities with integrated tools (the “limbs”) such as web search, image generation, and code execution.

Not every application offers every tool, and new capabilities are added regularly, so the ecosystem is constantly evolving.

A sample of tools that can be used with LLMs:

Models can also be accessed directly via APIs (Application Programming Interfaces), which allow developers to integrate LLMs into custom applications through code. This approach offers greater flexibility but requires programming expertise and separate configuration for each tool or capability you want to include.
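As a rough illustration of what "through code" can look like, the sketch below builds (but does not send) a chat-style HTTP request using only the Python standard library. The endpoint URL, model name, payload fields, and tool name are all hypothetical placeholders; each provider documents its own schema, so treat this as a shape to adapt rather than a working integration.

```python
import json
import urllib.request

# Hypothetical values for illustration only; a real provider documents its own
# endpoint URL, model names, and request schema.
API_URL = "https://llm.example.edu/v1/chat"
MODEL_NAME = "example-model"


def build_chat_request(prompt, api_key, tools=None):
    """Build (but do not send) an HTTP POST request carrying one user prompt."""
    payload = {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": prompt}],
    }
    # Tools are opt-in: each capability has to be listed explicitly.
    if tools:
        payload["tools"] = list(tools)
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


request = build_chat_request(
    "Summarize this paragraph.", api_key="YOUR_KEY", tools=["web_search"]
)
print(request.get_method())
print(json.loads(request.data))
```

Note that the tool list is passed in explicitly: unlike a chat application, nothing is enabled unless your code asks for it.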

Picking the right model, whether within a chatbot application or via the API, requires careful consideration of its capabilities and features. No two models are the same, as they differ across several key dimensions:

- Modalities: Models support different input and output types. Some handle text only, while multimodal models can process and generate vision (images, video) and audio. Multilingual proficiency also varies, as some excel across dozens of languages, while others are optimized primarily for English.

- Model Size: LLMs range from nano models (millions of parameters) to large models (hundreds of billions of parameters). Smaller models are faster and cheaper but offer basic capabilities. Larger models are slower and more expensive but provide superior reasoning, nuance, and accuracy for complex tasks.

- Reasoning Capability: Models span from simple text completion to sophisticated multi-step planning. Reasoning-enabled models break down complex problems into subtasks, revising their approach based on intermediate results.

- Context Window: Measured in tokens (roughly 0.75 words each), this determines how much information a model can process at a given time. Models range from 4K tokens (~3,000 words, or a few pages) to 1M+ tokens (~750,000 words, or multiple books). A larger context window makes it possible to process complete documents and maintain extended conversations. Note that context window is separate from model size: a small model can have a large context window, and vice versa.

- Safety Features: Models include varying levels of content filters, guardrails, and enterprise controls. Consumer versions offer basic safety; enterprise versions add compliance features like audit logs, data retention policies, and custom configurations for regulated industries.
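To make the context window arithmetic concrete, here is a small sketch that converts between words and tokens using the rough 0.75 words-per-token ratio quoted above. The function names and the `reserved_for_reply` parameter are illustrative assumptions, and real tokenizers vary by model and language, so the results are estimates only.

```python
import math

# Rough ratio from the text above; actual tokenizers differ by model and language.
WORDS_PER_TOKEN = 0.75


def words_to_tokens(word_count):
    """Estimate how many tokens a text of `word_count` words will use."""
    return math.ceil(word_count / WORDS_PER_TOKEN)


def tokens_to_words(token_count):
    """Estimate how many words fit in `token_count` tokens."""
    return int(token_count * WORDS_PER_TOKEN)


def fits_in_context(word_count, context_window_tokens, reserved_for_reply=0):
    """Check whether a document plus room for the model's reply fits the window."""
    needed = words_to_tokens(word_count) + reserved_for_reply
    return needed <= context_window_tokens


print(words_to_tokens(3000))         # 4000
print(tokens_to_words(1_000_000))    # 750000
print(fits_in_context(3000, 4000))   # True
print(fits_in_context(3000, 4000, reserved_for_reply=500))  # False
```

The last line shows why a document that nominally "fits" can still be too long: the model's response also consumes part of the same window.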

While chatbots provide conversational interfaces, AI agents represent a more autonomous approach to working with LLMs. Agents are systems that can independently break down complex tasks, decide which tools to use, execute multi-step workflows, and adapt their approach based on results, all with minimal human intervention. For example, an agent could be given a high-level goal to analyze quarterly sales data and create a summary report, and it would autonomously retrieve the data, perform the analysis, generate visualizations, draft the report, and even email it to stakeholders. By combining LLM reasoning with workflow automation and tool integration, agents can handle sophisticated, end-to-end processes that would otherwise require constant human oversight and manual coordination between different systems.
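The control flow of an agent can be sketched as a loop that repeatedly decides which tool to use next and folds the result back into its working state. The toy example below mirrors the sales-report scenario; every name in it is hypothetical, the sales figures are made up, and the `choose_next_tool` function is a hard-coded stand-in for the decision an LLM would make in a real agent.

```python
def retrieve_data(state):
    # Stand-in for a database or file lookup; the figures are invented.
    return {"sales": [120, 135, 150]}


def analyze(state):
    sales = state["sales"]
    return {"total": sum(sales), "growth": sales[-1] - sales[0]}


def draft_report(state):
    text = f"Total sales: {state['total']}; change over period: {state['growth']}"
    return {"report": text}


# Registry of tools the agent is allowed to call.
TOOLS = {
    "retrieve_data": retrieve_data,
    "analyze": analyze,
    "draft_report": draft_report,
}


def choose_next_tool(state):
    """Stand-in for the LLM's decision: pick a tool based on what is still missing."""
    if "sales" not in state:
        return "retrieve_data"
    if "total" not in state:
        return "analyze"
    if "report" not in state:
        return "draft_report"
    return None  # Goal reached; nothing left to do.


def run_agent(goal, max_steps=10):
    """Loop: decide, act, record the result, repeat until done or out of steps."""
    state = {"goal": goal}
    for _ in range(max_steps):
        tool_name = choose_next_tool(state)
        if tool_name is None:
            break
        state.update(TOOLS[tool_name](state))
    return state


result = run_agent("Analyze quarterly sales data and create a summary report")
print(result["report"])  # Total sales: 405; change over period: 30
```

The `max_steps` cap is a simple safeguard so the loop always terminates, even if the decision step never signals completion.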

The table below shows generative AI tools currently available from CIT, along with the maximum level of confidential Cornell data approved for each tool. Your unit’s local IT department may offer additional tools beyond this list.

To request access to any of these services, contact ai-support@cornell.edu and we’ll help get you set up.

- Pilot

Cornell AI Platform

- General purpose

Microsoft 365 Copilot Chat

- Under Review

OpenAI ChatGPT Edu

- Under Review

Claude

Zoom AI Companion

- General purpose

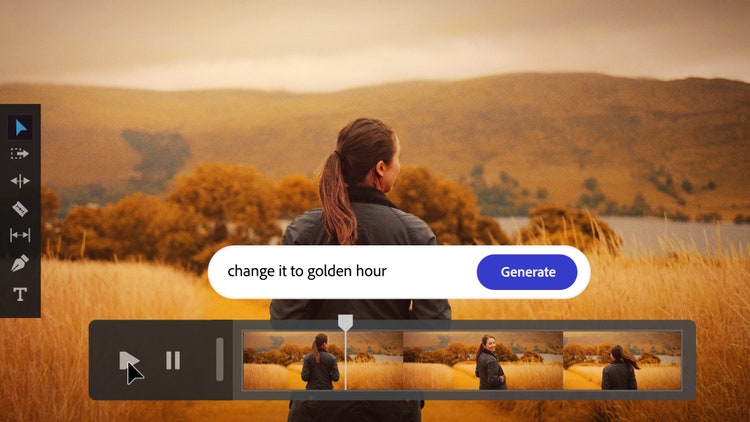

Adobe Firefly