How I Built a 3,600 Line Feature in 4 Hours Without Writing a Single Line of Code

Hi, I’m Fermin Romero III. I’m the Application Development Manager for Student Services Information Technologies at Cornell University, and I volunteer as a tech lead with the AI Innovation Lab team. Last semester, our team presented Cornell’s AI Platform at AWS re:Invent 2025 in Las Vegas, where we shared how the university is democratizing AI development while maintaining security and compliance.

One of the projects I lead through the AI Innovation Lab is Campus Groups Audit Automation, which processes 10,000 reimbursement requests per semester for 1,600 student groups. That project came from a conversation with Jonathan Hart, a finance colleague who described the manual grind of auditing all those requests. I recognized an AI use case, pitched it to the lab, and assembled a team to experiment with a solution.

What I’m about to describe happened during development of that system. It represents something I think will fundamentally change how we build software.

The numbers: 3,631 lines of code added. 4 hours from start to push. 0 lines written by me personally. 34 files changed across frontend and backend. 16 commits executed autonomously.

This is what happened when I combined Claude Code with an open source skill set called Superpowers to implement a complete AI integration module for our production application.

What Are These Tools?

Claude Code is an agentic coding tool from Anthropic that lives in your terminal and IDE. It understands your codebase, executes tasks, and handles git workflows through natural language commands. Unlike autocomplete or code suggestions, it operates as an agent that can examine files, run commands, make edits across multiple files, and iterate on problems until they’re solved. At Cornell, we access Claude through the CIT AI API Gateway, the same platform we presented at re:Invent.

Superpowers is an open source collection of “skills” for coding agents created by Jesse Vincent (github.com/obra/superpowers). It provides workflows that activate automatically based on context. Before writing code, it asks what you’re really trying to do and builds a specification through conversation. After you approve the design, it creates detailed implementation plans with small, verifiable tasks. Then it dispatches subagents to work through each task, reviewing their output and continuing forward autonomously.

The Project

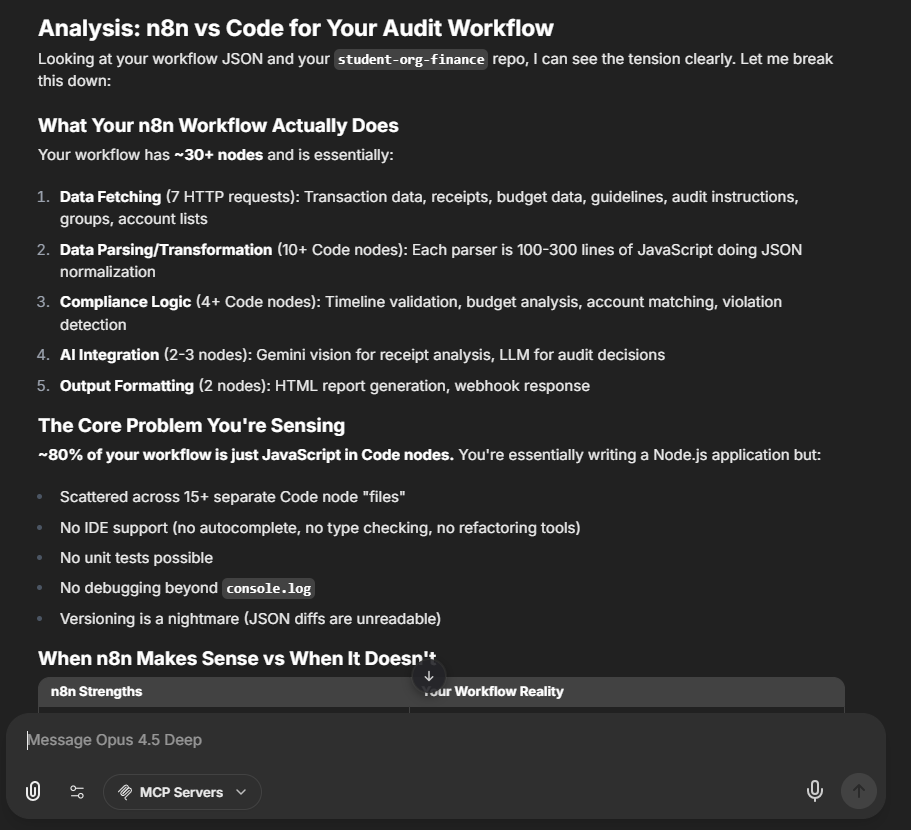

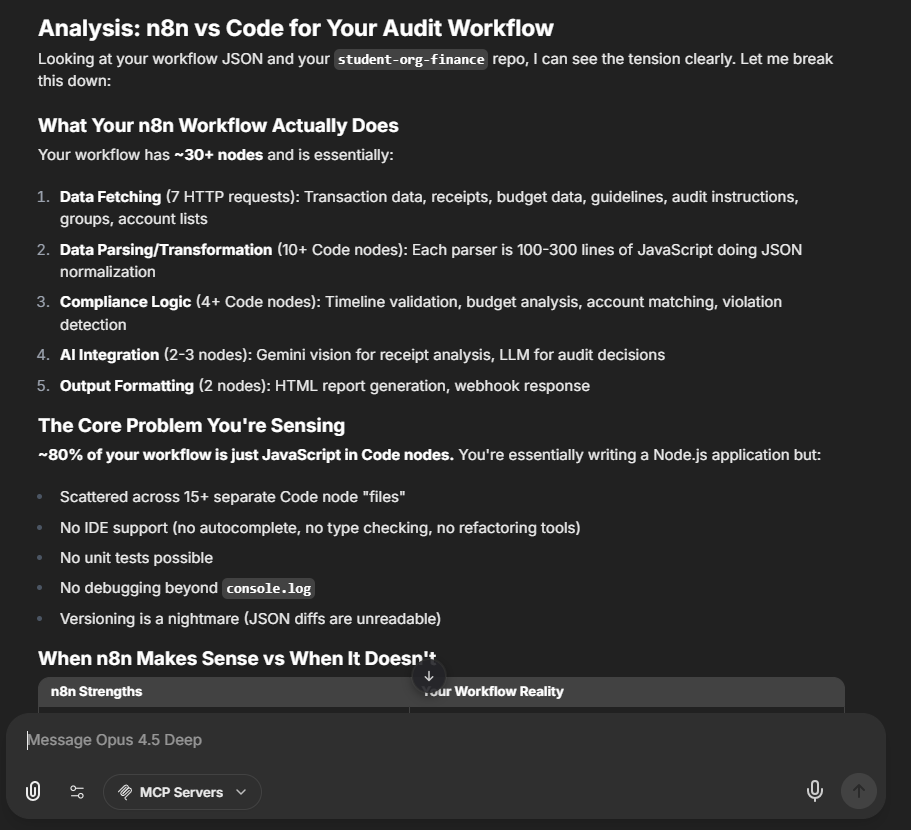

Our audit application had its original workflow living entirely inside N8N, a visual workflow platform. We had built transaction analysis, receipt OCR, compliance checking, and audit decisions there. The problem was that about 80% of that N8N workflow was JavaScript scattered across 15+ separate code nodes with no IDE support, no type checking, and no unit tests possible.

The goal was to migrate all that logic into our Express/TypeScript backend, leaving N8N to handle only AI orchestration.
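To illustrate what that migration buys, here is a minimal sketch of the kind of logic that could move out of a code node and into a typed, testable function. It is not code from the actual application: the `RawTransaction` shape, the field names, and `parseTransaction` are all hypothetical.

```typescript
// Hypothetical: an untyped record as a workflow code node might receive it.
type RawTransaction = Record<string, unknown>;

// Hypothetical typed result the rest of the backend can rely on.
interface Transaction {
  id: string;
  amountCents: number;
  purchaseDate: Date;
}

// Parse and validate a raw record, throwing a descriptive error on bad input.
function parseTransaction(raw: RawTransaction): Transaction {
  const id = raw["id"];
  if (typeof id !== "string" || id.length === 0) {
    throw new Error("transaction id must be a non-empty string");
  }

  // Accept either a number or a non-empty numeric string for the amount.
  const rawAmount = raw["amount"];
  const amount =
    typeof rawAmount === "string" && rawAmount.trim() !== ""
      ? Number(rawAmount)
      : rawAmount;
  if (typeof amount !== "number" || !Number.isFinite(amount) || amount < 0) {
    throw new Error(`invalid amount for transaction ${id}`);
  }

  const purchaseDate = new Date(String(raw["purchaseDate"]));
  if (Number.isNaN(purchaseDate.getTime())) {
    throw new Error(`invalid purchase date for transaction ${id}`);
  }

  // Store money as integer cents to avoid floating point drift in later math.
  return { id, amountCents: Math.round(amount * 100), purchaseDate };
}
```

Once the logic lives in a plain function like this, the compiler checks the types and a unit test can exercise it directly, which was not practical inside the workflow tool.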

Day One: Building the Specification

The work began the day before coding. I exported our complete N8N workflow as JSON and brought it into a conversation with Claude Opus 4.5. I asked Claude to walk me through what the workflow does, step by step. By having it explain the workflow back to me, I was loading all that context into the conversation.

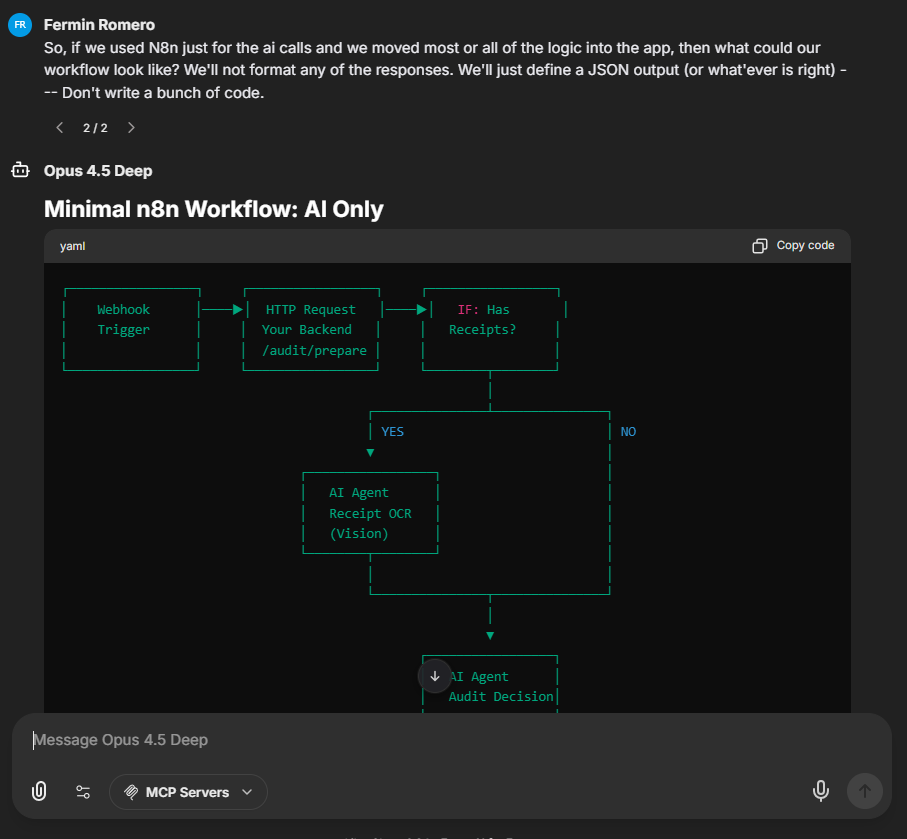

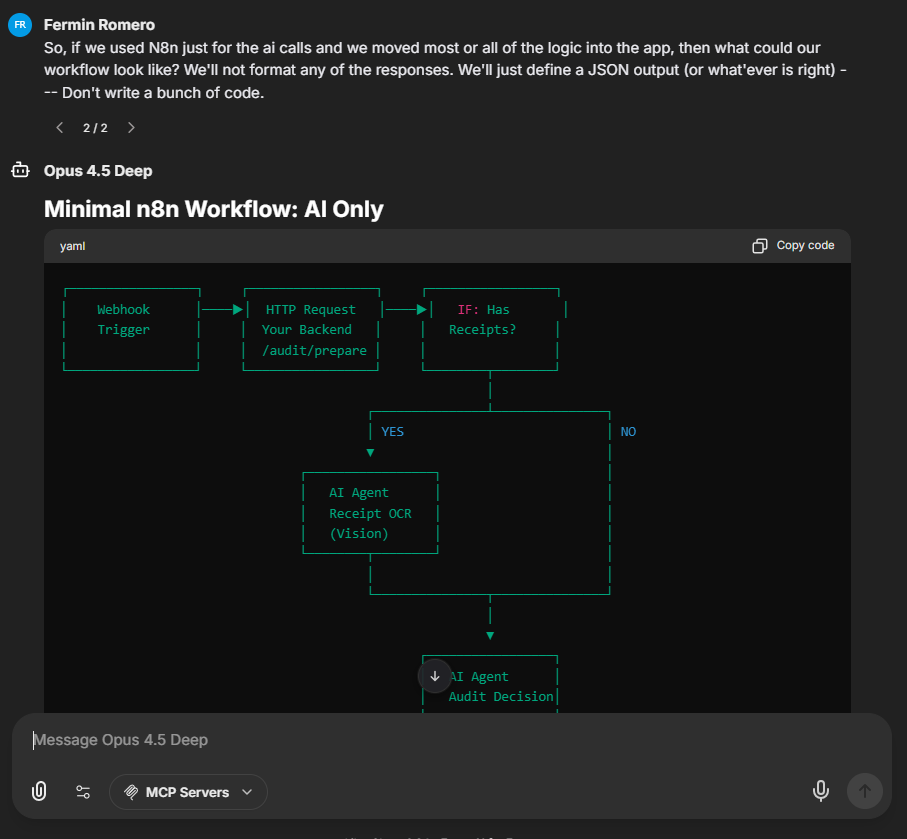

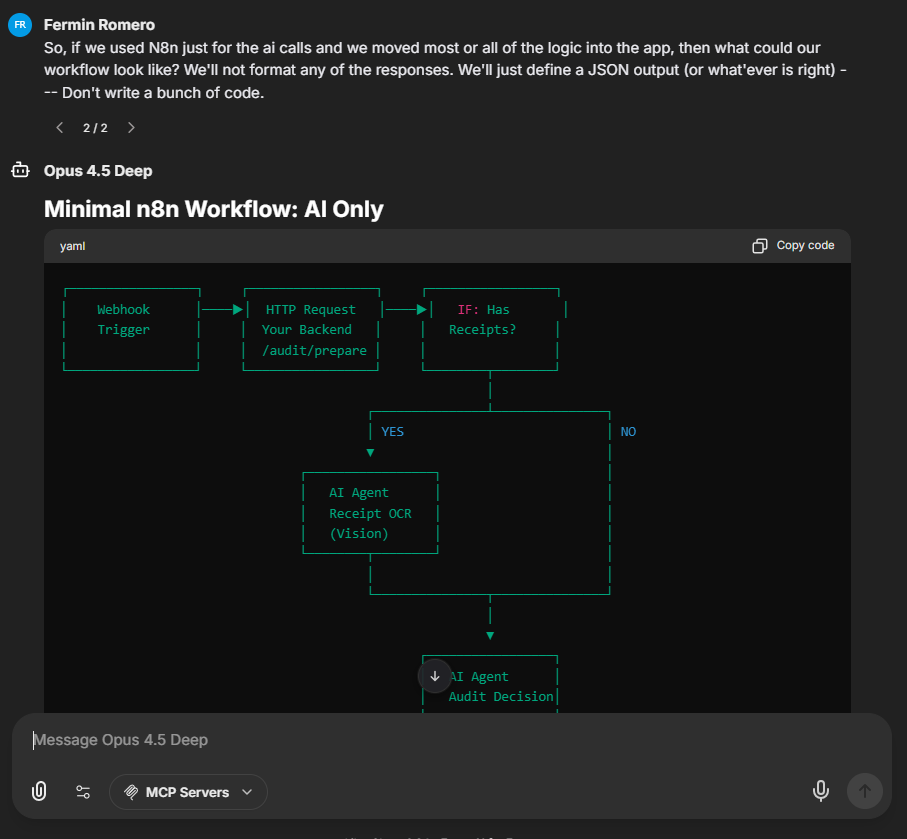

Then I asked: “If N8N were just used for the AI calls, what could our workflow look like?”

What followed was a collaborative design session. Claude asked questions about where transaction data comes from, how payers work, whether final decisions happen on the backend or with AI. We went back and forth, whittling the workflow from 30+ calls to just 2.

Along the way, we made explicit decisions. Backend handles math: timeline compliance, funding compliance, account lookups. AI handles semantic understanding: detecting gift cards or alcohol on receipts, verifying itemization, judging if business purposes are adequate.
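That split can be sketched roughly as follows. This is an illustration under stated assumptions, not the code from our pull request: the interfaces, the function names, and the 30-day window are hypothetical placeholders for whatever the real policy and data model define.

```typescript
// Hypothetical flags an AI step might return after reading a receipt.
interface AiReceiptFindings {
  hasGiftCard: boolean;
  hasAlcohol: boolean;
  isItemized: boolean;
  businessPurposeAdequate: boolean;
}

// Hypothetical fields the backend already knows without any AI call.
interface ReimbursementRequest {
  purchaseDate: Date;
  submittedDate: Date;
  amountCents: number;
  remainingBudgetCents: number;
}

const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Backend math: was the request submitted within the allowed window?
// The 30-day default is an assumed value for illustration only.
function isTimelineCompliant(req: ReimbursementRequest, maxDays = 30): boolean {
  const elapsedDays =
    (req.submittedDate.getTime() - req.purchaseDate.getTime()) / MS_PER_DAY;
  return elapsedDays >= 0 && elapsedDays <= maxDays;
}

// Backend math: does the remaining budget cover the requested amount?
function isFundingCompliant(req: ReimbursementRequest): boolean {
  return req.amountCents <= req.remainingBudgetCents;
}

// Combine deterministic checks with the AI's semantic findings.
function collectIssues(req: ReimbursementRequest, ai: AiReceiptFindings): string[] {
  const issues: string[] = [];
  if (!isTimelineCompliant(req)) issues.push("submitted outside the allowed window");
  if (!isFundingCompliant(req)) issues.push("amount exceeds remaining budget");
  if (ai.hasGiftCard) issues.push("receipt includes a gift card");
  if (ai.hasAlcohol) issues.push("receipt includes alcohol");
  if (!ai.isItemized) issues.push("receipt is not itemized");
  if (!ai.businessPurposeAdequate) issues.push("business purpose is inadequate");
  return issues;
}
```

The design point is that anything computable from dates and numbers stays in ordinary, testable code, and the model is only asked the questions that require reading and judgment.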

By the end, I had a comprehensive scope document covering folder structure, files to change, API endpoints, and data flow. Claude estimated about 4 weeks of work.

Day Two: 4 Hours with Superpowers

At 8 AM the next morning, I opened VS Code with Claude Code and Superpowers installed and entered this prompt:

“We are already on the new feature branch, but you can check. I want you to read this scope doc, I want you to inspect the code, I want you to build a plan to implement the entire scope doc, and execute the plan.”

Claude Code loaded the brainstorming skill automatically, examined the scope document, inspected the codebase, and verified we were on the correct branch. It transitioned to plan creation, built detailed tasks, then asked whether I wanted subagent driven or parallel execution. I chose subagent driven.

It started asking permission to run commands: npm installs, starting the dev server, running tests. I would approve and sometimes select “don’t ask again for similar commands.”

Then it went quiet. For 15 minutes at a time, it would churn through the plan, editing files, running tests, making commits. I would glance over between meetings to see it was still working.

At 11 AM it reported that the work was complete and ready for testing. I started the dev server, ran test transactions, and it worked. Not perfect. Some formatting issues and edge cases. But the core functionality was there.

What Got Built

The pull request shows a new backend module with controllers, services, parsers for survey submissions and budget data, validators for timeline and policy rules, and AI service integration for Gemini vision analysis. It adds frontend components for reviewing AI analysis results, displaying receipt images, and triggering fresh analysis. It also includes configuration updates for N8N integration, bearer token authentication, and PDF to PNG conversion, along with updated middleware.

The code follows our existing patterns. It integrated cleanly with our authentication, session management, and error handling.
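For readers unfamiliar with bearer token authentication between a workflow tool and a backend, here is a minimal, framework-agnostic sketch of the idea. It is not the middleware from our application: `extractBearerToken`, `tokensMatch`, and `isAuthorized` are hypothetical names, and the sketch assumes a single shared token configured on both sides.

```typescript
// Pull the token out of an Authorization header of the form "Bearer <token>".
function extractBearerToken(header: string | undefined): string | null {
  if (!header) return null;
  const match = /^Bearer\s+(\S+)$/i.exec(header.trim());
  return match?.[1] ?? null;
}

// Compare two tokens without exiting early on the first mismatch, so the
// comparison time does not reveal how many leading characters were correct.
function tokensMatch(provided: string, expected: string): boolean {
  if (provided.length !== expected.length) return false;
  let diff = 0;
  for (let i = 0; i < provided.length; i++) {
    diff |= provided.charCodeAt(i) ^ expected.charCodeAt(i);
  }
  return diff === 0;
}

// True only when the header carries a well-formed bearer token that matches.
function isAuthorized(header: string | undefined, expectedToken: string): boolean {
  const token = extractBearerToken(header);
  return token !== null && tokensMatch(token, expectedToken);
}
```

In an Express app, a check like this would typically sit in middleware that reads the `Authorization` request header and responds with 401 when `isAuthorized` returns false. In a Node.js backend, the built-in `crypto.timingSafeEqual` is a better choice than a hand-rolled comparison loop.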

What I Actually Did

I did not write code. What I did was load context by spending time making sure Claude understood our existing system. I made architectural decisions when Claude presented options. I steered by checking progress and approving commands. I tested by running actual transactions and identifying edge cases. I fixed bugs in subsequent sessions.

I also need to give a big shoutout to my colleague Phil Williammee, who I couldn’t have done this without. Phil put in the time to review the pull request and helped me figure out the best way to implement this without breaking everything. AI can generate code, but having an experienced developer review that code and catch issues before they hit production is still essential.

The scope document was the crucial artifact. It was detailed enough that Claude Code could read it, understand it, and implement it without constant guidance.

Context Is Everything

The more context you provide, the more accurately the AI can reproduce what you want. I had the complete N8N workflow JSON, a conversational design session that explored alternatives, a scope document covering architecture and edge cases, and an existing codebase with established patterns for Claude to follow.

Claude wasn’t inventing architecture. It was implementing a detailed specification within an existing codebase that demonstrated our conventions. Superpowers provided the workflow discipline to execute systematically rather than jumping around.

What This Means

This feature would have taken at least a week traditionally. Maybe more, given that I’m not a professional developer. I’m an IT manager who understands how our apps work and can read code, but I don’t write TypeScript day to day.

With these emerging skill systems, the gap between understanding what you want and having working code is collapsing. If you can clearly articulate the specification and provide enough context about your existing system, execution becomes almost automatic.

This doesn’t replace the need for understanding. I still had to know what questions to ask, recognize when suggestions didn’t fit our architecture, make judgment calls, and test the output. What changes is that the bottleneck shifts from “can I write this code” to “can I specify this clearly enough.”

Fermin Romero is the Manager of Business Applications at Cornell’s Student Services IT. He spent his early career maintaining avionics on F-15E Strike Eagles in the Air Force, where he learned that the best technology is the kind that works when it matters. He volunteers with the AI Innovation Lab and co-hosts the AI Exploration Series, building platforms designed to put real tools in the hands of the people closest to the problems.

Resources

- Claude Code: github.com/anthropics/claude-code

- Superpowers: github.com/obra/superpowers

- Cornell AI API Gateway: Contact ai-support@cornell.edu for access